Today we live in a world where we're constantly inundated with flashy moving things, content tailored to three-second attention spans, and now AI slop. So Andy Wardlaw of LampLight Radio Play and I (H.M. Radcliff) decided to make something simple.

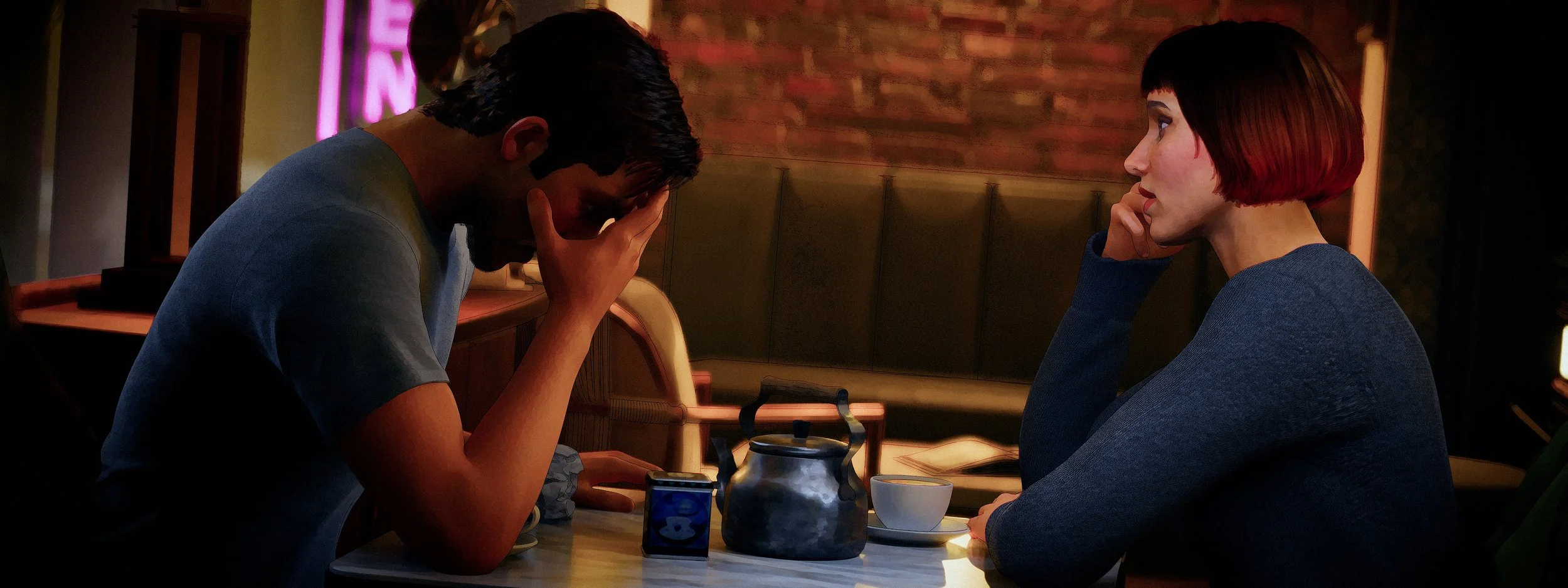

Inspired by the 1962 film La Jetée, "The Perfect Present" is a visual adaptation of Andy’s short audio story of the same name, which was originally produced as part of the Equinox anthology podcast. Instead of animating every frame, we froze the story world, letting the environment, props, body language, and vocal performances do the heavy lifting.

The cafe environment was built in Unreal Engine, and every frame was carefully art directed. Stylistically, the film uses a Kuwahara filter to create a painterly look that sidesteps the uncanny valley and enhances the scene's lighting and color palette.

The short film will appear at film festivals in 2026. In the meantime, below is a breakdown of how it was made.

TOOLS: Blender, Substance Painter, Unreal Engine

THE CONCEPT

The first phase of the project was deciding on the storytelling approach and aesthetic. Andy and I knew we wanted to tell the story through stills centered on a handful of important props. We didn't want the characters themselves to be the visual focus, which would have reduced the project to an over-the-shoulder, two-way conversation. We wanted the location to be a main character, and the simplicity of the approach to stand in stark contrast to the story's themes.

Pinterest was the logical choice for building a mood board for the short. There, we could see how well an art-deco-inspired cafe in a New England-style city paired with light cyberpunk visual elements, which signal that the story takes place in the future.

The marriage of exposed brick, dark wood, brass, jewel tones, and colored lights felt right.

BUILDING THE CAFE

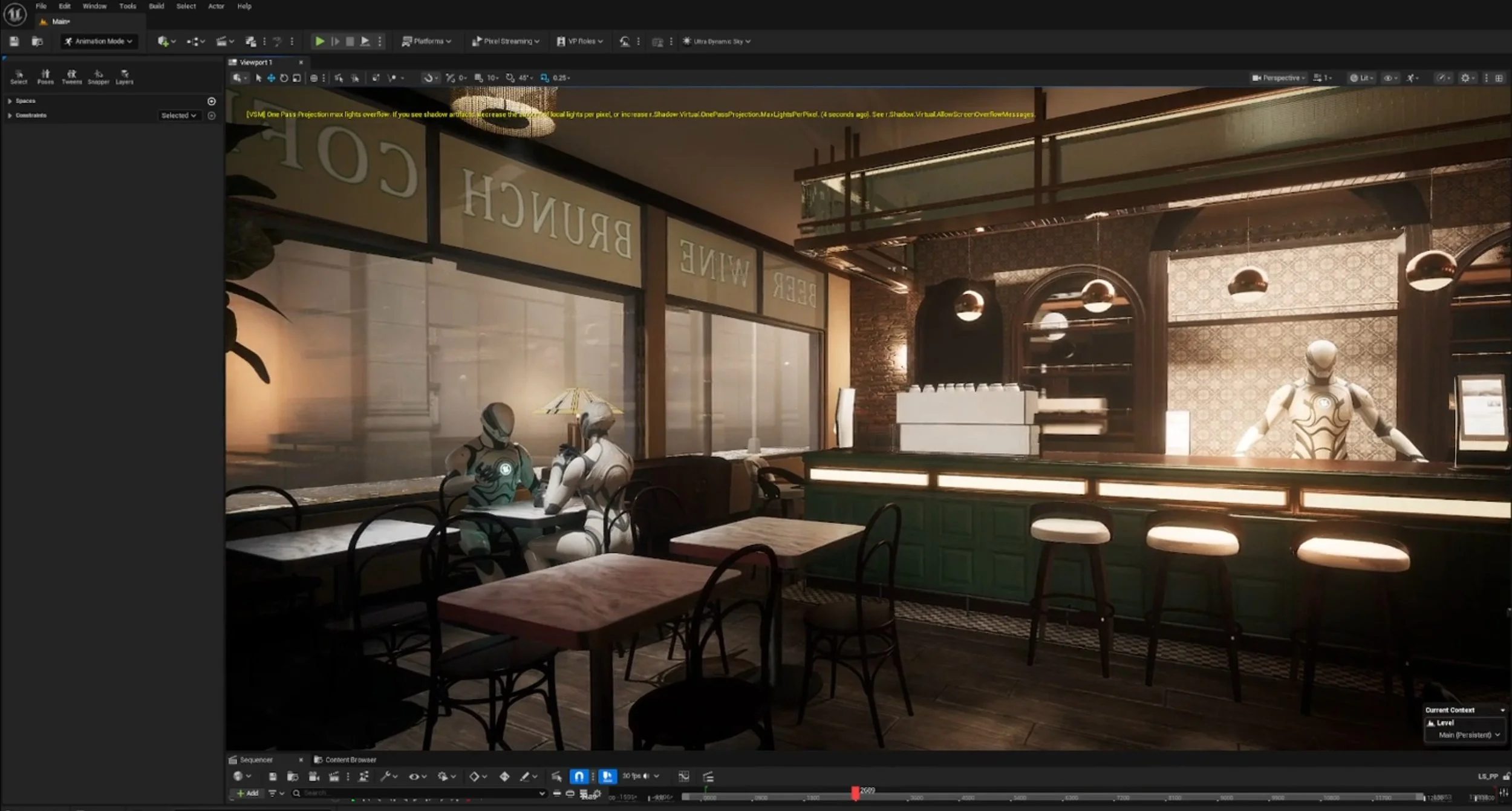

The scene has three parts: the cafe interior, the cafe building exterior, and the surrounding cityscape. Each zone came with its own considerations and challenges. The cafe interior would need extensive set direction, props, and extras. The building exterior needed to exude a lived-in neighborhood vibe. The surrounding neighborhood was the lowest priority; it had to look urban but sleepy. And since the story takes place around Christmas, some light snowfall would be appropriate.

The building itself was modeled in Blender and textured in Substance Painter.

Fun fact: the name of the cafe, “Noise Floor,” is an homage to a small community of audio fiction creators, many of whom participated in the audio portion of the film as background voices, minor characters, or names called out for fictional coffee orders.

After the cafe was completed, it was imported into Unreal Engine for major blocking, to make sure the scene worked at a broad level before we moved on to detail.

The street scene was populated using the Downtown City Pack toolkit. The scene's snow-covered cobblestone streets were modeled in Unreal Engine 5.6 using remeshing and displacement techniques, with snow and brick textures from the FAB marketplace.

The cafe interior combines assets from FAB and CGTrader with room dividers modeled in Blender. The snow itself, which ultimately gives the film a "snow globe" feeling, comes from Ultra Dynamic Sky, a blueprint system for generating weather within Unreal Engine. The interior uses a mixture of 2K and 4K materials, which was sufficient because we would be applying a filter to the final images (keep reading).

CHARACTERS

The characters were created using MetaHuman Creator (5.5 and 5.6). Legacy MetaHumans were imported and migrated into 5.6 for further customization, and rigged using 5.5 clothing assets. Each critical story beat needed only a single keyframe pose.

MetaHumans are so realistic out of the box that they can be distracting, so we wanted to explore ways to dial back the realism. We used a Kuwahara post-process material by Florian Schmoldt, which achieved a painterly effect.

The background characters received the same treatment. Because the filter smooths away fine detail anyway, the MetaHumans were rendered at no higher than LOD 2, which cut unnecessary detail and helped optimize the scene.
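For readers curious about what a Kuwahara filter actually does, here is a minimal sketch of the classic algorithm in Python. It is not Florian Schmoldt's material, which runs as an Unreal post-process shader on full-color frames. This sketch assumes a grayscale image stored as a list of rows, and the `kuwahara` function name and `radius` parameter are hypothetical. For each pixel, the filter examines four overlapping square regions that meet at that pixel, picks the one with the lowest variance, and outputs that region's average. Flat areas melt into soft, paint-like patches while hard edges survive, because the filter averages on one side of an edge instead of across it.

```python
from statistics import fmean, pvariance


def kuwahara(image: list[list[float]], radius: int = 2) -> list[list[float]]:
    """Classic Kuwahara filter for a grayscale image given as a list of rows.

    Hypothetical helper for illustration; not the Unreal material used in the film.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    out = [[0.0] * width for _ in range(height)]

    def sample(y: int, x: int) -> float:
        # Clamp coordinates so pixels near the border reuse the nearest edge value.
        return image[min(max(y, 0), height - 1)][min(max(x, 0), width - 1)]

    for y in range(height):
        for x in range(width):
            best_mean, best_var = 0.0, float("inf")
            # The four (radius+1) x (radius+1) quadrants sharing pixel (y, x):
            # up-left, up-right, down-left, down-right.
            for sy, sx in ((-1, -1), (-1, 1), (1, -1), (1, 1)):
                values = [
                    sample(y + sy * i, x + sx * j)
                    for i in range(radius + 1)
                    for j in range(radius + 1)
                ]
                var = pvariance(values)
                # Keep the most uniform quadrant; ties go to the first one checked.
                if var < best_var:
                    best_mean, best_var = fmean(values), var
            out[y][x] = best_mean
    return out


if __name__ == "__main__":
    # A single bright speck on a dark field is softened rather than smeared.
    speck = [[0.0] * 5 for _ in range(5)]
    speck[2][2] = 1.0
    for row in kuwahara(speck, radius=1):
        print(row)
```

A larger `radius` samples bigger quadrants, which in general produces broader, flatter "brush strokes" at a higher per-pixel cost.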

We can't show the results just yet because we are only beginning the festival submission process. But if you'd like to follow along, follow @packhowl on Instagram for teasers and updates.